4.6 Threading Issues

The fork() and exec() System Calls

If one thread in a program calls fork(), does the new process duplicate all threads, or is the new process single-threaded? Some UNIX systems have chosen to have two versions of fork(), one that duplicates all threads and another that duplicates only the thread that invoked the fork() system call.
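The common fork-then-exec pattern can be sketched as below. This is a minimal illustration, not a definitive implementation: `background_worker` and `fork_exec_demo` are hypothetical names, and the sketch assumes a POSIX system where `fork()` replicates only the calling thread and where an `echo` program is on the `PATH`. Because `exec()` replaces the entire process image, including all threads, duplicating only the calling thread is enough when `exec()` follows immediately.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <time.h>
#include <unistd.h>

/* Hypothetical background thread: it only sleeps, so the parent is
   multithreaded at the moment fork() is called. */
static void *background_worker(void *arg) {
    (void)arg;
    struct timespec pause_time = {0, 200 * 1000 * 1000}; /* 200 ms */
    nanosleep(&pause_time, NULL);
    return NULL;
}

/* Returns the child's exit status, or -1 on error. */
int fork_exec_demo(void) {
    pthread_t tid;
    if (pthread_create(&tid, NULL, background_worker, NULL) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        pthread_join(tid, NULL);
        return -1;
    }
    if (pid == 0) {
        /* Child: under POSIX, only a replica of the calling thread
           exists here. exec() then replaces the whole process. */
        execlp("echo", "echo", "hello from the child", (char *)NULL);
        _exit(127); /* reached only if exec failed */
    }

    /* Parent: wait for the child, then for the background thread. */
    int status = 0;
    pid_t waited = waitpid(pid, &status, 0);
    pthread_join(tid, NULL);
    if (waited < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

Calling `fork_exec_demo()` from `main` (compiled with `-pthread`) should return 0 when the child ran `echo` successfully.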

Signal Handling

A signal is used in UNIX systems to notify a process that a particular event has occurred. A signal may be received either synchronously or asynchronously, depending on the source of and the reason for the event being signaled. All signals, whether synchronous or asynchronous, follow the same pattern:

- A signal is generated by the occurrence of a particular event.

- The signal is delivered to a process.

- Once delivered, the signal must be handled.

Typically, an asynchronous signal is sent to another process.

A signal may be handled by one of two possible handlers:

1. A default signal handler

2. A user-defined signal handler

Every signal has a default signal handler that the kernel runs when handling that signal. This default action can be overridden by a user-defined signal handler that is called to handle the signal. Signals are handled in different ways. Some signals may be ignored, while others (for example, an illegal memory access) are handled by terminating the program.
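Overriding the default action with a user-defined handler might look like the following sketch. It uses the POSIX `sigaction()` call. The names `on_sigusr1`, `got_signal`, and `handler_demo` are hypothetical, and the sketch assumes `SIGUSR1` is not blocked in the calling thread.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <string.h>

/* Set by the handler; sig_atomic_t is safe to write from a handler. */
static volatile sig_atomic_t got_signal = 0;

/* User-defined handler: it only records that the signal arrived. */
static void on_sigusr1(int signo) {
    (void)signo;
    got_signal = 1;
}

/* Returns 1 if the user-defined handler ran, -1 on setup failure. */
int handler_demo(void) {
    struct sigaction action, previous;
    memset(&action, 0, sizeof action);
    action.sa_handler = on_sigusr1;
    sigemptyset(&action.sa_mask);

    /* Replace the default action for SIGUSR1 (terminate the process). */
    if (sigaction(SIGUSR1, &action, &previous) != 0)
        return -1;

    got_signal = 0;
    raise(SIGUSR1); /* the handler runs before raise() returns */

    /* Restore whatever disposition was installed before. */
    sigaction(SIGUSR1, &previous, NULL);
    return got_signal;
}
```

The handler does nothing but set a flag. This is a deliberate choice, since only a small set of functions is safe to call from inside a signal handler.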

Delivering signals is more complicated in multithreaded programs, where a process may have several threads. Where, then, should a signal be delivered?

In general, the following options exist:

- Deliver the signal to the thread to which the signal applies.

- Deliver the signal to every thread in the process.

- Deliver the signal to certain threads in the process.

- Assign a specific thread to receive all signals for the process.
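The last option in the list above, a dedicated signal-receiving thread, can be sketched with `pthread_sigmask()` and `sigwait()`. This is one possible arrangement under stated assumptions rather than the only way to do it: the names `receiver_args`, `signal_receiver`, and `dedicated_thread_demo` are hypothetical, and the sketch assumes no other thread in the process has `SIGUSR1` unblocked.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <signal.h>
#include <sys/types.h>
#include <unistd.h>

struct receiver_args {
    sigset_t set;  /* signals the dedicated thread waits for */
    int received;  /* signal number accepted, or -1 */
};

/* Dedicated thread: accepts the signal synchronously with sigwait(). */
static void *signal_receiver(void *arg) {
    struct receiver_args *args = arg;
    int signo = 0;
    if (sigwait(&args->set, &signo) == 0)
        args->received = signo;
    return NULL;
}

/* Returns the signal number the dedicated thread received, or -1. */
int dedicated_thread_demo(void) {
    struct receiver_args args;
    sigset_t old_mask;
    pthread_t tid;

    args.received = -1;
    sigemptyset(&args.set);
    sigaddset(&args.set, SIGUSR1);

    /* Block the signal first: threads created later inherit the mask,
       so no thread will have the signal delivered asynchronously. */
    if (pthread_sigmask(SIG_BLOCK, &args.set, &old_mask) != 0)
        return -1;
    if (pthread_create(&tid, NULL, signal_receiver, &args) != 0) {
        pthread_sigmask(SIG_SETMASK, &old_mask, NULL);
        return -1;
    }

    /* Send a process-directed signal; it stays pending until the
       receiver thread accepts it in sigwait(). */
    kill(getpid(), SIGUSR1);

    pthread_join(tid, NULL);
    pthread_sigmask(SIG_SETMASK, &old_mask, NULL);
    return args.received;
}
```

Because the signal is consumed by `sigwait()` rather than by a handler, the receiving thread is free to call ordinary functions when reacting to it.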

An example of implementing a signal handler:

https://www.thegeekstuff.com/2012/03/catch-signals-sample-c-code/

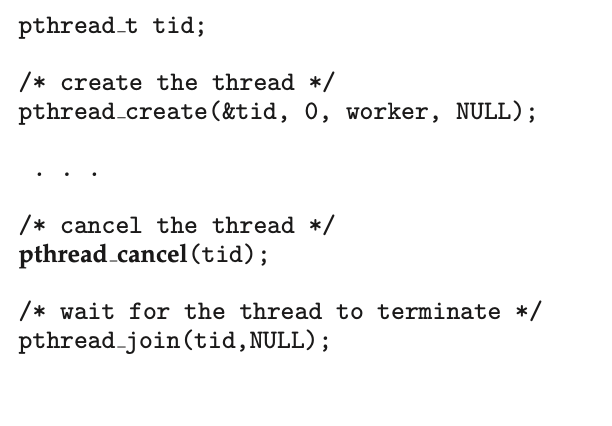

Thread Cancellation

Thread cancellation involves terminating a thread before it has completed.

A thread that is to be canceled is often referred to as the target thread. Cancellation of a target thread may occur in two different scenarios:

- Asynchronous cancellation. One thread immediately terminates the target thread.

- Deferred cancellation. The target thread periodically checks whether it should terminate, allowing it an opportunity to terminate itself in an orderly fashion.

One technique for establishing a cancellation point is to invoke the pthread_testcancel() function. If a cancellation request is found to be pending, the call to pthread_testcancel() will not return, and the thread will terminate; otherwise, the call to the function will return, and the thread will continue to run. Additionally, Pthreads allows a function known as a cleanup handler to be invoked if a thread is canceled. This function allows any resources a thread may have acquired to be released before the thread is terminated.
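Deferred cancellation with a cancellation point and a cleanup handler could be sketched as follows. The names `release_resource`, `cancellable_worker`, and `cancel_demo` are hypothetical, and the "resource" is only an integer flag standing in for something like a lock or a buffer. The sketch relies on the Pthreads defaults: cancellation enabled, deferred type.

```c
#include <pthread.h>

/* Cleanup handler: runs when the thread is canceled. Here it only
   sets a flag to stand in for releasing a real resource. */
static void release_resource(void *arg) {
    int *released = arg;
    *released = 1;
}

static void *cancellable_worker(void *arg) {
    pthread_cleanup_push(release_resource, arg);
    for (;;) {
        /* ... one unit of work would go here ... */

        /* Cancellation point: does not return if a cancellation
           request is pending. */
        pthread_testcancel();
    }
    /* Never reached, but push and pop must be paired in the same
       lexical scope. */
    pthread_cleanup_pop(0);
    return NULL;
}

/* Returns 1 if the worker was canceled and its cleanup handler ran. */
int cancel_demo(void) {
    pthread_t tid;
    int released = 0;
    void *result = NULL;

    if (pthread_create(&tid, NULL, cancellable_worker, &released) != 0)
        return -1;
    if (pthread_cancel(tid) != 0) /* only requests cancellation */
        return -1;
    if (pthread_join(tid, &result) != 0)
        return -1;

    return (result == PTHREAD_CANCELED && released == 1) ? 1 : 0;
}
```

Note that `pthread_cancel()` only records the request. The target thread actually terminates the next time it reaches a cancellation point, which is what makes the cancellation deferred.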

An interesting note is that on Linux systems, thread cancellation using the Pthreads API is handled through signals.

Thread-Local Storage

Threads belonging to a process share the data of the process. Indeed, this data sharing provides one of the benefits of multithreaded programming. However, in some circumstances, each thread might need its own copy of certain data. We will call such data thread-local storage (or TLS).

It is easy to confuse TLS with local variables. However, local variables are visible only during a single function invocation, whereas TLS data are visible across function invocations.
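The difference from an ordinary local variable can be seen in a small sketch that uses the C11 `_Thread_local` storage class (Pthreads keys via `pthread_key_create()` are an alternative). The names `call_count`, `record_call`, `count_calls`, and `tls_demo` are hypothetical. Each thread gets its own `call_count`, and the value persists across calls to `record_call()` within that thread.

```c
#include <pthread.h>

/* One independent copy of this counter exists per thread. */
static _Thread_local int call_count = 0;

/* The counter survives across invocations, unlike a local variable. */
static int record_call(void) {
    return ++call_count;
}

struct count_job {
    int calls; /* how many times the thread calls record_call() */
    int seen;  /* final counter value observed by that thread */
};

static void *count_calls(void *arg) {
    struct count_job *job = arg;
    for (int i = 0; i < job->calls; i++)
        job->seen = record_call();
    return NULL;
}

/* Returns 1 if every thread saw only its own count. */
int tls_demo(void) {
    struct count_job first = {3, 0};
    struct count_job second = {5, 0};
    pthread_t t1, t2;

    call_count = 0; /* reset the calling thread's own copy */
    record_call();  /* the calling thread's copy is now 1 */

    if (pthread_create(&t1, NULL, count_calls, &first) != 0)
        return -1;
    if (pthread_create(&t2, NULL, count_calls, &second) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);

    /* With a shared global the counts would have mixed together. */
    return (first.seen == 3 && second.seen == 5 && call_count == 1) ? 1 : 0;
}
```

No locking is needed around `call_count` here, because no two threads ever touch the same copy.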

Scheduler Activations

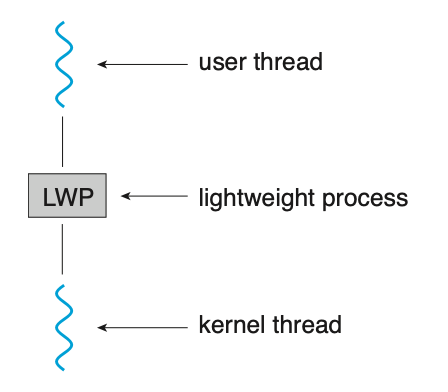

Many systems implementing either the many-to-many or the two-level model place an intermediate data structure between the user and kernel threads. This data structure is typically known as a lightweight process, or LWP.

To the user-thread library, the LWP appears to be a virtual processor on which the application can schedule a user thread to run. Each LWP is attached to a kernel thread, and it is kernel threads that the operating system schedules to run on physical processors. If a kernel thread blocks (such as while waiting for an I/O operation to complete), the LWP blocks as well. Up the chain, the user-level thread attached to the LWP also blocks.

One scheme for communication between the user-thread library and the kernel is known as scheduler activation. It works as follows: The kernel provides an application with a set of virtual processors (LWPs), and the application can schedule user threads onto an available virtual processor. Furthermore, the kernel must inform an application about certain events. This procedure is known as an upcall. Upcalls are handled by the thread library with an upcall handler, and upcall handlers must run on a virtual processor.